Recent

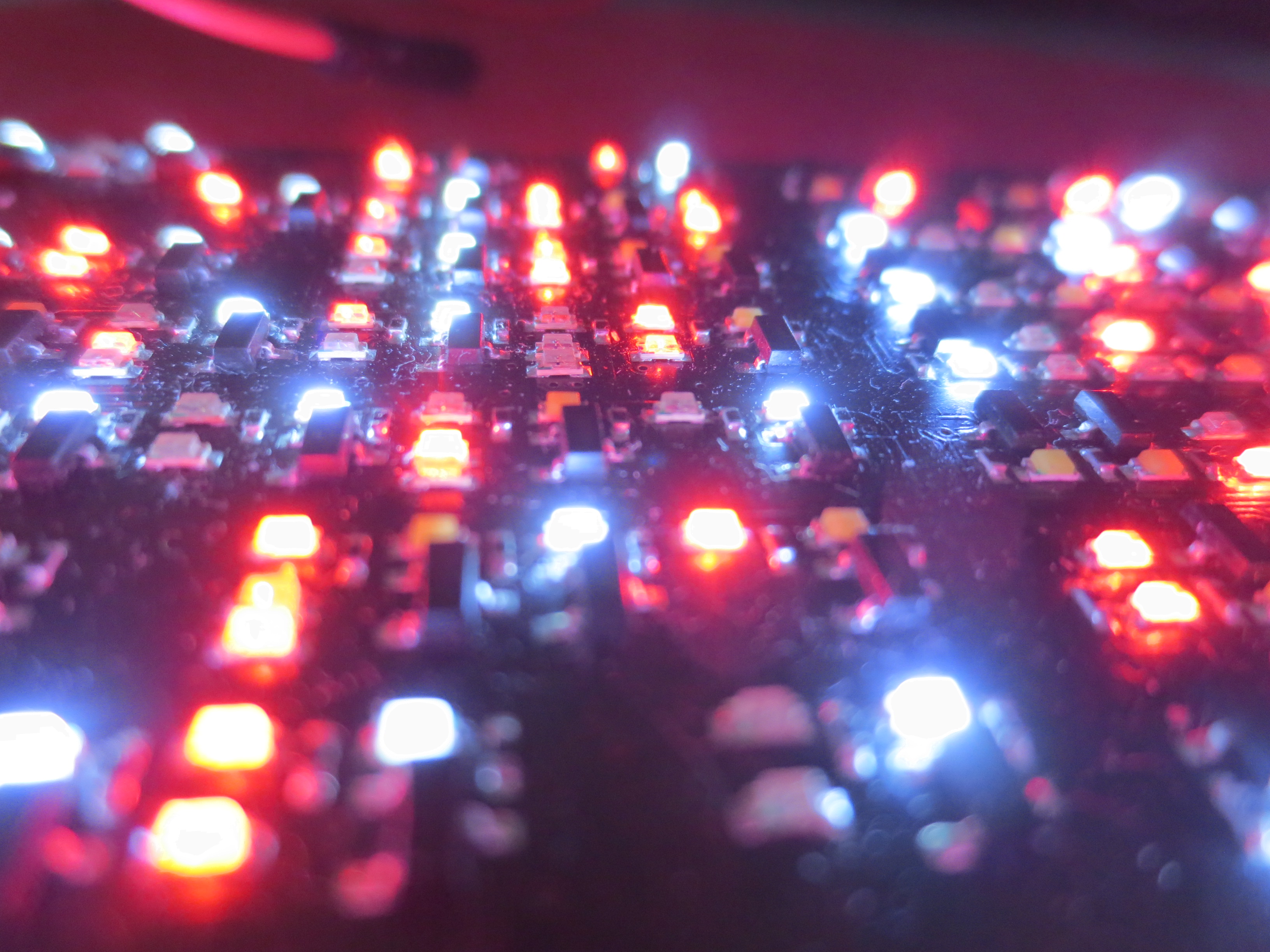

BitNetMCU with CNN: >99.5% MNIST accuracy on a low-end Microcontroller

·2261 words·11 mins

Combining a deep-depthwise CNN architecture with variable quantization in BitNetMCU achieves state-of-the-art MNIST accuracy on a low-end 32-bit microcontroller with 4 kB RAM and 16 kB flash.

Measuring Thinking Efficiency in Reasoning Models: The Missing Benchmark

·7 words

(Guest article on the Nous Research blog) Anecdotal evidence suggests that open-weight models produce significantly more tokens than closed-weight models on similar tasks. This report investigates these observations systematically. We confirm that the trend generally holds, but observe significant differences depending on the problem domain.

Candle Flame Oscillations as a Clock

·1856 words·9 mins

Today's candles have been optimized not to flicker. But it turns out that when three of them are bundled together, the resulting triplet starts to oscillate naturally. Remarkably, the frequency is fairly stable at ~9.9 Hz, as it depends mainly on gravity and the diameter of the flame. We detect the oscillation with a suspended wire and divide it down to 1 Hz.

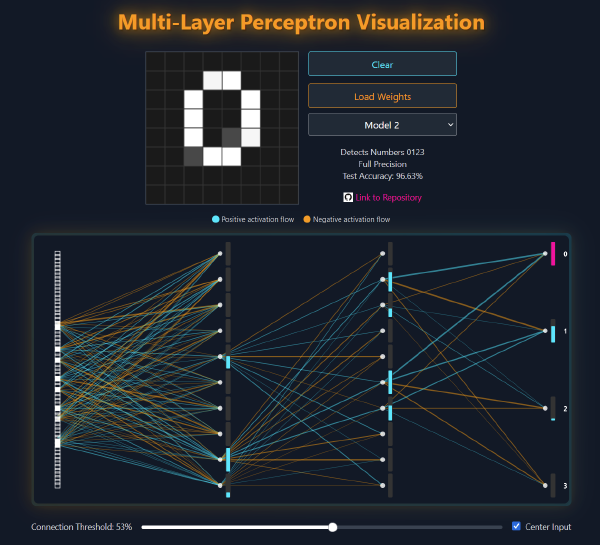

Neural Network Visualization

·148 words·1 min

A browser-based interactive application that visualizes simple multi-layer perceptron (MLP) neural networks for the inference of 8x8 pixel images.

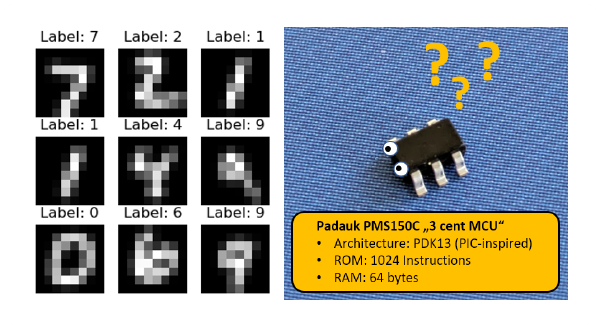

BitNetPDK: Neural Networks (MNIST inference) on the "3-cent" Microcontroller

·1227 words·6 mins

Is it possible to implement reasonably accurate inference of MNIST, the handwritten digits dataset, on a “3-cent” microcontroller with only 64 bytes of RAM and 1K of instruction memory?